Introduction:

In the digital era, content filtering and blocking have become essential tools for regulating online information. As society grapples with the vast amount of available content, concerns regarding appropriateness, security, and legal compliance arise. While content filtering aims to protect users from harmful or objectionable material, it also raises ethical questions regarding censorship and freedom of expression.

Content Filtering: Balancing Protection with Freedom

Content filtering refers to the process of screening internet content based on predefined criteria. It is primarily employed by individuals, organizations, or governments to ensure safe browsing experiences for users.
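To make "predefined criteria" concrete, here is a minimal sketch of one common approach, a domain blocklist organized by category. The domain names, the categories, and the function name `blocked_category` are hypothetical and chosen only for illustration; real filtering products rely on much larger, maintained lists and often on additional signals.

```python
from urllib.parse import urlparse

# Hypothetical blocklist mapping domains to categories (illustrative only).
BLOCKED_DOMAINS = {
    "adult.example": "adult",
    "violence.example": "violence",
    "malware.example": "malware",
}


def blocked_category(url, blocked=BLOCKED_DOMAINS):
    """Return the category under which the URL is blocked, or None if allowed."""
    host = (urlparse(url).hostname or "").lower()
    parts = host.split(".")
    # Check the host and each parent domain so that subdomains are covered too.
    for i in range(len(parts)):
        candidate = ".".join(parts[i:])
        if candidate in blocked:
            return blocked[candidate]
    return None


if __name__ == "__main__":
    for url in ("https://www.adult.example/page", "https://school.example/lesson"):
        category = blocked_category(url)
        print(url, "->", f"blocked ({category})" if category else "allowed")
```

A list like this is easy to audit, but it also shows where the trade-off discussed here comes from: whoever maintains the list decides what is blocked, and an overly broad entry can hide legitimate material along with the objectionable kind.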

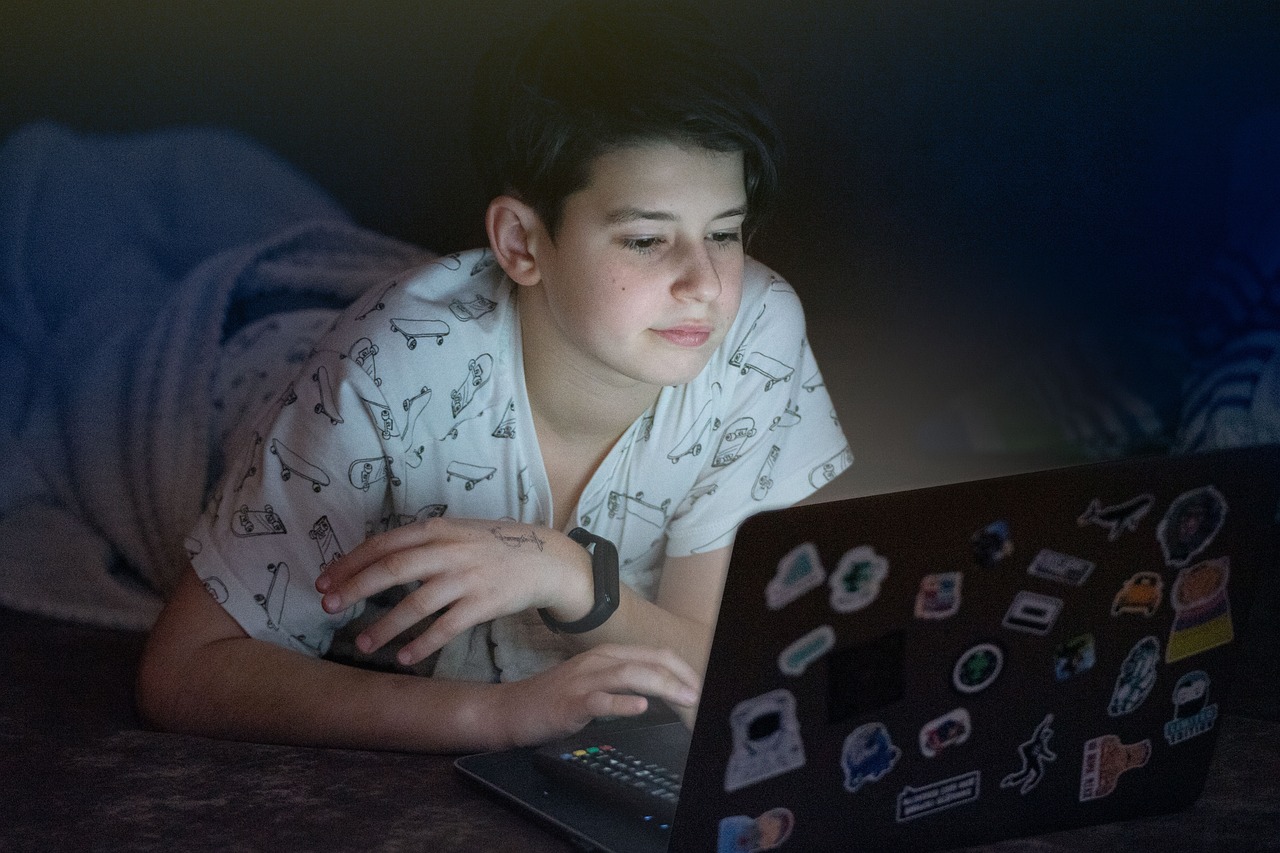

One of the primary reasons for implementing content filtering is to protect children from explicit or age-inappropriate materials that may negatively impact their psychological development. By utilizing filters that block websites containing adult content or violence, parents can create a safer online environment for their children. However, critics argue that such measures limit access to valuable educational resources and curtail freedom of exploration.

Businesses also employ content filtering systems within their networks to enhance productivity and reduce potential legal liabilities caused by employee misuse of internet resources. These systems help prevent unauthorized access to confidential information, minimize distractions caused by non-work-related websites or social media platforms, and safeguard against malware or phishing attacks.

Governmental Content Blocking: The Slippery Slope

Governments around the world have been known to engage in more extensive forms of content blocking that extend beyond individual choice or parental control mechanisms. In such cases, governments selectively restrict citizens' access to specific websites or online platforms, citing political considerations or national security concerns. Critics argue that this practice infringes upon citizens' right to access information and violates freedom of expression.

Countries such as China, Iran, and North Korea are notorious for implementing extensive content blocking measures; China's system is widely known as the "Great Firewall." Such state-controlled censorship aims to regulate or suppress politically sensitive content and dissenting voices. While governments may justify these measures as necessary for maintaining social stability or protecting national interests, they often lead to violations of human rights and hinder individuals from freely expressing their opinions.

The Ethical Dilemma: Striking a Balance

Content filtering and blocking raise ethical dilemmas surrounding censorship, privacy invasion, and freedom of expression.

While protecting individuals from harmful or inappropriate materials is crucial, it is difficult to determine who should decide what is acceptable for public consumption. Balancing security concerns with preserving democratic values requires transparent policies that involve multiple stakeholders, including internet service providers (ISPs), government bodies, civil society organizations, and individual users.

Conclusion:

Content filtering and blocking play a pivotal role in navigating the vast landscape of online information, but they carry ethical implications for censorship and freedom of expression. It is essential to strike a balance between safeguarding against harmful material and ensuring an inclusive digital space that respects diverse perspectives. By fostering open dialogue and engaging all stakeholders in decision-making processes, we can address the complex challenges associated with content filtering without compromising fundamental democratic principles.